How Good is Your Model?

After you train a model, you need to evaluate it on your testing data.

What statistics are most meaningful for:

- Classification models (which produce labels)

- Regression models (which produce numerical value predictions)

Evaluating Classifiers

There are four possible outcomes for a binary classifier:

- True positives (TP), where + is labeled +

- True negatives (TN), where - is labeled -

- False positives (FP), where - is labeled +

- False negatives (FN), where + is labeled -
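The four outcomes can be tallied directly from paired true and predicted labels. This is a minimal Python sketch, assuming labels are the strings "+" and "-"; the function name `binary_outcomes` and the sample labels are hypothetical, chosen only for illustration:

```python
def binary_outcomes(actual, predicted, positive="+"):
    """Count TP, TN, FP, FN over paired true/predicted labels."""
    tp = tn = fp = fn = 0
    for a, p in zip(actual, predicted):
        if p == positive:
            if a == positive:
                tp += 1  # + labeled +
            else:
                fp += 1  # - labeled +
        else:
            if a == positive:
                fn += 1  # + labeled -
            else:
                tn += 1  # - labeled -
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}


actual = ["+", "+", "-", "-", "+", "-"]
predicted = ["+", "-", "-", "+", "+", "-"]
print(binary_outcomes(actual, predicted))  # {'TP': 2, 'TN': 2, 'FP': 1, 'FN': 1}
```

Every statistic in the rest of this section can be computed from these four counts.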

Threshold Classifiers

Identifying the best threshold requires deciding on an appropriate evaluation metric.

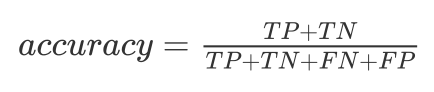
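One simple way to pick a threshold, sketched in Python under illustrative assumptions: treat every distinct score as a candidate, label items scoring at or above it as positive, and keep whichever threshold does best under the chosen metric. The scores, labels, and helper names are hypothetical, and accuracy is used only as an example metric; any other metric with the same signature can be passed in instead.

```python
def classify(scores, threshold):
    # An item is labeled positive when its score meets or exceeds the threshold
    return [s >= threshold for s in scores]


def accuracy(labels, preds):
    # Example metric: fraction of predictions that match the true labels
    return sum(l == p for l, p in zip(labels, preds)) / len(labels)


def best_threshold(scores, labels, metric):
    # Try each distinct score as a threshold; keep the first one with the best metric value
    best_t, best_val = None, float("-inf")
    for t in sorted(set(scores)):
        val = metric(labels, classify(scores, t))
        if val > best_val:
            best_t, best_val = t, val
    return best_t, best_val


scores = [0.1, 0.4, 0.35, 0.8, 0.65, 0.2]
labels = [False, False, True, True, True, False]
print(best_threshold(scores, labels, accuracy))  # (0.35, 0.8333333333333334)
```

Here thresholds 0.35 and 0.65 tie on accuracy; the strict `>` comparison keeps the lower one. A different metric could well pick a different threshold, which is why the metric has to be decided first.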

Accuracy

The accuracy is the ratio of correct predictions over total predictions:

$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$

A monkey randomly guessing P or N would achieve 50% accuracy.

Always picking the biggest class yields at least 50% accuracy.

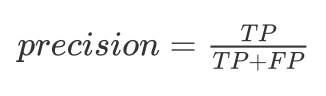
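A small Python sketch of accuracy and the two baselines, assuming a hypothetical test set in which 5% of items are positive (1) and the rest negative (0); the data and the fixed random seed are illustrative choices:

```python
import random


def accuracy(actual, predicted):
    # (TP + TN) / total: the fraction of predictions that match the true label
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical test set: 5 positives (1) and 95 negatives (0)
actual = [1] * 5 + [0] * 95

rng = random.Random(0)
monkey = [rng.choice([0, 1]) for _ in actual]  # guesses P or N uniformly at random
majority = [0] * len(actual)                   # always picks the biggest class

print(accuracy(actual, majority))  # 0.95
print(accuracy(actual, monkey))    # near 0.5, varying with the seed
```

The majority baseline scores 95% here while never identifying a single positive case.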

Precision

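When |P| << |N|, accuracy is a silly measure. If only 5% of tests say cancer, are we happy with a 50%-accurate monkey?

Precision is the fraction of positive predictions that are correct:

$\text{Precision} = \frac{TP}{TP + FP}$

The monkey would achieve 5% precision, as would a classifier that always says cancer.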

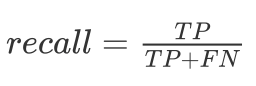
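Precision in a few lines of Python, checked against the "always says cancer" classifier. The 5-in-100 data is hypothetical, and returning 0.0 when nothing is labeled positive is an assumed convention, not part of the definition:

```python
def precision(actual, predicted):
    # TP / (TP + FP): of the items labeled positive, the fraction that truly are
    tp = sum(1 for a, p in zip(actual, predicted) if a and p)
    fp = sum(1 for a, p in zip(actual, predicted) if not a and p)
    # Assumption: report 0.0 when nothing was labeled positive
    return tp / (tp + fp) if tp + fp else 0.0


# Hypothetical data: 5% of the 100 patients have cancer (1)
actual = [1] * 5 + [0] * 95
always_cancer = [1] * len(actual)
print(precision(actual, always_cancer))  # 0.05
```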

Recall

In the cancer case, we would tolerate some false positives (scares) in order to identify the real cases:

$\text{Recall} = \frac{TP}{TP + FN}$

Recall measures how often we are right on the positive instances. Saying everyone has cancer gives perfect recall.

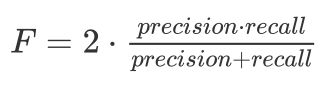
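The same hypothetical 5-in-100 data shows how recall rewards the "everyone has cancer" answer. As with precision, the 0.0 return for a set with no positives is an assumed convention:

```python
def recall(actual, predicted):
    # TP / (TP + FN): of the truly positive items, the fraction we labeled positive
    tp = sum(1 for a, p in zip(actual, predicted) if a and p)
    fn = sum(1 for a, p in zip(actual, predicted) if a and not p)
    # Assumption: report 0.0 when there are no positive items at all
    return tp / (tp + fn) if tp + fn else 0.0


actual = [1] * 5 + [0] * 95               # hypothetical: 5 cancer cases in 100
print(recall(actual, [1] * len(actual)))  # 1.0, "everyone has cancer"
print(recall(actual, [0] * len(actual)))  # 0.0, "nobody has cancer"
```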

F-Score (best single evaluation choice)

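To get a meaningful single score balancing precision and recall, use the F-score, the harmonic mean of the two:

$F = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$

The harmonic mean is always less than or equal to the arithmetic mean, so a high F-score requires both high precision and high recall.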
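A quick check of the harmonic-mean penalty, using the precision and recall of the "always says cancer" classifier from the hypothetical 5% example. Returning 0.0 when both inputs are zero is an assumed convention to avoid dividing by zero:

```python
def f_score(precision, recall):
    # Harmonic mean of precision and recall; defined as 0.0 when both are zero
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# The "always say cancer" classifier from the 5% example: precision 0.05, recall 1.0
p, r = 0.05, 1.0
print(round(f_score(p, r), 3))  # 0.095
print((p + r) / 2)              # 0.525, the arithmetic mean
```

Perfect recall cannot rescue the score: the arithmetic mean would report over 50%, while the F-score stays below 10%.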

Take Away Lessons

- Accuracy is misleading when the class sizes are substantially different.

- High precision is very hard to achieve with unbalanced class sizes.

- F-score does the best job of any single statistic, but all four work together to describe the performance of a classifier.

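Receiver Operating Characteristic (ROC) Curves

Varying the threshold trades recall (the true positive rate) against the false positive rate; the ROC curve plots this trade-off over all thresholds. The area under the ROC curve (AUC) summarizes accuracy across all thresholds: 1.0 is perfect, while 0.5 is no better than random guessing.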
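A minimal ROC sketch in plain Python, under illustrative assumptions: higher scores mean "more likely positive", labels are 1/0, and both classes are present. It sweeps the threshold from the highest score down, emitting one (false positive rate, true positive rate) point per distinct score, then integrates the curve with the trapezoid rule. Function names and data are hypothetical.

```python
from itertools import groupby


def roc_points(scores, labels):
    # Returns (FPR, TPR) points as the threshold drops past each distinct score
    pos = sum(labels)
    neg = len(labels) - pos
    ranked = sorted(zip(scores, labels), key=lambda t: t[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]
    # Items with tied scores cross the threshold together, giving one point per group
    for _, group in groupby(ranked, key=lambda t: t[0]):
        for _, y in group:
            if y:
                tp += 1
            else:
                fp += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    # Trapezoid rule over consecutive ROC points
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
labels = [1, 1, 0, 1, 0, 0]
print(round(auc(roc_points(scores, labels)), 3))  # 0.889
```

In this example every positive outscores every negative except the pair (0.6, 0.7), so the AUC is 8/9. In general the AUC equals the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counting half.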

Evaluating Multiclass Systems

Classification gets harder with more classes.

The confusion matrix shows where the mistakes are being made.
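A multiclass confusion matrix is just a count of (true class, predicted class) pairs. This Python sketch uses hypothetical classes "A", "B", "C"; rows are true classes, columns are predicted classes, so correct answers land on the main diagonal:

```python
from collections import Counter


def confusion_matrix(actual, predicted, classes):
    # counts[(a, p)] = number of items with true class a that were predicted as p
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in classes] for a in classes]


classes = ["A", "B", "C"]
actual = ["A", "A", "B", "B", "C", "C", "C"]
predicted = ["A", "B", "B", "C", "C", "C", "B"]
for cls, row in zip(classes, confusion_matrix(actual, predicted, classes)):
    print(cls, row)
# A [1, 1, 0]
# B [0, 1, 1]
# C [0, 1, 2]
```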

Confusion Matrix: Dating Documents

What periods are most often confused with each other?

The heaviest weight does not always lie exactly on the main diagonal.

Scoring Hard Problems Easier

Too low a classification rate is discouraging and often misleading with multiple classes.

The top-k success rate gives you credit if the right label is among your first k guesses.

It is important to pick k so that real improvements can be recognized.
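A top-k success rate sketch in Python, assuming the model returns its guesses for each item as a list ordered from most to least likely. The decade labels below are hypothetical, loosely in the spirit of dating documents:

```python
def top_k_accuracy(ranked_guesses, actual, k):
    # Credit an item if its true label appears among its first k guesses
    hits = sum(1 for guesses, a in zip(ranked_guesses, actual) if a in guesses[:k])
    return hits / len(actual)


# Hypothetical ranked guesses for three documents
ranked = [["1850s", "1860s", "1840s"],
          ["1900s", "1890s", "1910s"],
          ["1770s", "1780s", "1760s"]]
actual = ["1860s", "1910s", "1770s"]
for k in (1, 2, 3):
    print(k, top_k_accuracy(ranked, actual, k))
```

The score climbs from 1/3 at k = 1 to 2/3 at k = 2 and 1.0 at k = 3; once k covers every guess the rate is always perfect and no longer distinguishes models.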

Summary Statistics: Numerical Error

For numerical values, error is a function of the delta between forecast f and observation o:

- Absolute error: (f - o)

- Relative error: (f - o) / o (typically better)

These can be aggregated over many tests:

- Mean or median squared error

- Root mean squared error
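The error definitions and aggregates above in a short Python sketch, using hypothetical forecasts and observations. Following the text, absolute error is kept signed as (f - o); relative error divides by the observation and is undefined when o = 0, a case this sketch does not guard against.

```python
import math
import statistics


def errors(forecasts, observations):
    # Signed absolute error (f - o) and relative error (f - o) / o for each pair
    abs_err = [f - o for f, o in zip(forecasts, observations)]
    rel_err = [(f - o) / o for f, o in zip(forecasts, observations)]
    return abs_err, rel_err


forecasts = [110.0, 95.0, 12.0, 30.0]
observations = [100.0, 100.0, 10.0, 30.0]

abs_err, rel_err = errors(forecasts, observations)
squared = [e * e for e in abs_err]
mse = statistics.mean(squared)          # mean squared error
median_se = statistics.median(squared)  # median squared error
rmse = math.sqrt(mse)                   # root mean squared error

print(abs_err)  # [10.0, -5.0, 2.0, 0.0]
print(rel_err)  # [0.1, -0.05, 0.2, 0.0]
print(mse, median_se, round(rmse, 3))  # 32.25 14.5 5.679
```

The third forecast has the second-smallest absolute error but the largest relative error (20%), which is why relative error is usually the more informative of the two when observations vary in scale.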

Evaluation Data

The best way to assess models involves out-of-sample predictions: results on data you never saw (or, even better, that did not exist) when you built the model.

Partitioning the input into training (60%), testing (20%) and evaluation (20%) data works only if you never open the evaluation data until the end.
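A reproducible 60/20/20 partition can be sketched in Python as below. The function name and the fixed seed are illustrative assumptions; the code can only make the split, while keeping the evaluation set closed until the end remains a matter of discipline.

```python
import random


def split_60_20_20(records, seed=0):
    # Shuffle a copy with a fixed seed so the partition is reproducible
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    a, b = n * 6 // 10, n * 8 // 10  # integer cut points at 60% and 80%
    return shuffled[:a], shuffled[a:b], shuffled[b:]


train, test, evaluation = split_60_20_20(range(100))
print(len(train), len(test), len(evaluation))  # 60 20 20
# Set `evaluation` aside; it should not be touched until the final assessment
```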

Sin in Evaluation

Formal evaluation metrics reduce models to a few summary statistics. But many problems can be hidden by statistics:

- Did I mix training and evaluation data?

- Do I have bugs in my implementation?

Revealing such errors requires understanding the types of errors your model makes.

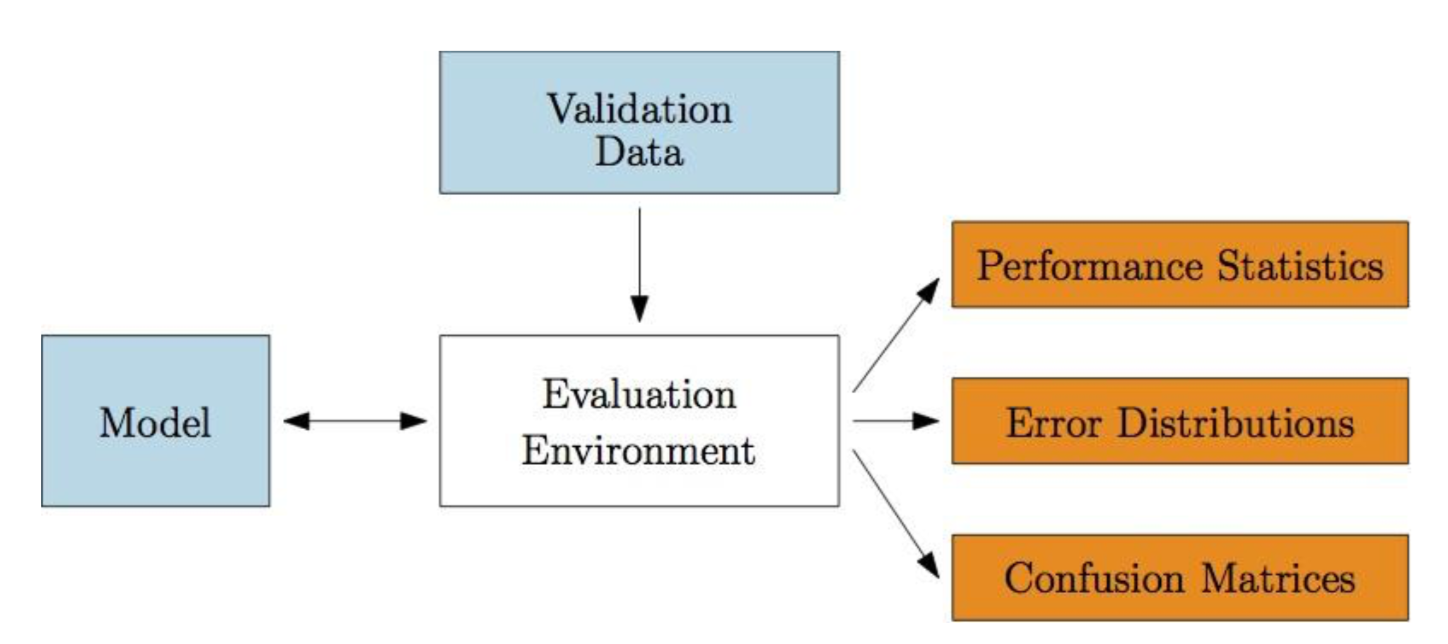

Building an Evaluation Environment

You need a single-command program to run your model on the evaluation data, and produce plots/reports on its effectiveness.

Input: evaluation data with outcome variables

Embedded: a function encoding the current model

Output: summary statistics and distributions of predictions on data vs. outcome variables
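A minimal sketch of such a single-command harness, assuming a numeric model that maps one input to one prediction. The model `current_model` and the evaluation data are hypothetical stand-ins; a real environment would load its evaluation file and also write plots, which this sketch omits.

```python
import statistics


def evaluate(model, inputs, outcomes):
    # Run the embedded model on every evaluation input
    predictions = [model(x) for x in inputs]
    # Keep the full error distribution, not just a pass/fail count
    errors = [p - o for p, o in zip(predictions, outcomes)]
    abs_errors = [abs(e) for e in errors]
    return {
        "n": len(errors),
        "mean_error": statistics.mean(errors),
        "median_abs_error": statistics.median(abs_errors),
        "max_abs_error": max(abs_errors),
        "errors": errors,
    }


def current_model(x):
    # Hypothetical stand-in for the model under evaluation
    return 2.0 * x


if __name__ == "__main__":
    # Hypothetical evaluation data with known outcome variables
    inputs = [1.0, 2.0, 3.0, 4.0]
    outcomes = [2.5, 4.0, 5.0, 8.5]
    report = evaluate(current_model, inputs, outcomes)
    for key, value in report.items():
        print(f"{key}: {value}")
```

Running the script is the single command; swapping in a new `current_model` and rerunning gives directly comparable reports.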

Evaluation Environment Architecture

Designing Good Evaluation Systems

- Produce error distributions in addition to binary outcomes (how close was your prediction, not just right or wrong).

- Automatically produce a report with multiple plots and distributions, then read it carefully.

- Output relevant summary statistics about performance to quickly gauge quality.
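Reporting an error distribution can be as simple as a text histogram. This Python sketch bins signed errors into fixed-width buckets, including empty ones, so gaps in the distribution stay visible. The errors (think predicted year minus true year) and the bin width are hypothetical, and the function assumes at least one error.

```python
import math


def error_histogram(errors, bin_width):
    # Count errors per bin [k * width, (k + 1) * width), including empty bins in between
    counts = {}
    for e in errors:
        k = math.floor(e / bin_width)
        counts[k] = counts.get(k, 0) + 1
    return [(k * bin_width, (k + 1) * bin_width, counts.get(k, 0))
            for k in range(min(counts), max(counts) + 1)]


# Hypothetical signed errors, e.g. predicted year minus true year
errors = [-12, -3, -1, 0, 2, 4, 4, 9, 25]
for lo, hi, n in error_histogram(errors, 10):
    print(f"[{lo:4d}, {hi:4d})  {'#' * n}")
```

A single accuracy number would hide that most errors here are small while one is off by 25.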

Error Histograms: Dating Documents

Evaluation Environment: Results Table

The Veil of Ignorance

A joke is not funny the second time because you already know the punchline.

Good performance on data you trained models on is very suspect, because models can easily be overfit.

Out-of-sample predictions are the key to being honest, if you have enough data and time for them.

Cross-Validation

Often we do not have enough data to separate training and evaluation data.

Train on (k - 1)/k of the data, evaluate on the remaining 1/k, then repeat for each of the k folds and average.

The win here is that you also get the variance of your model's accuracy across folds!

The limiting case is leave-one-out cross-validation, where k equals the number of examples.
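A k-fold cross-validation sketch in Python. To stay self-contained it uses a deliberately trivial, hypothetical "model" that predicts the mean of its training targets, scored by mean squared error; the data and seed are illustrative. It returns both the average score and its variance across folds, and passing k equal to the number of examples gives leave-one-out.

```python
import random
import statistics


def k_fold_indices(n, k, seed=0):
    # Shuffle indices once, then deal them into k folds of near-equal size
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(ys, k, seed=0):
    # Hypothetical "model": predict the mean of the training targets
    scores = []
    for held_out in k_fold_indices(len(ys), k, seed):
        held = set(held_out)
        train = [y for i, y in enumerate(ys) if i not in held]
        guess = statistics.mean(train)
        # Score this fold by mean squared error on the held-out items
        scores.append(statistics.mean([(ys[i] - guess) ** 2 for i in held_out]))
    # Average across folds, plus the variance across folds (needs k >= 2)
    return statistics.mean(scores), statistics.variance(scores)


ys = [3.1, 2.7, 3.5, 4.0, 2.9, 3.3, 3.8, 2.5, 3.0, 3.6]
print(cross_validate(ys, k=5))        # 5-fold
print(cross_validate(ys, k=len(ys)))  # leave-one-out
```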

Amplifying Small Evaluation Sets

- Create Negative Examples: when positive examples are rare, all others are likely negative

- Perturb Real Examples: this creates similar but synthetic ones by adding noise

- Give Partial Credit: score by how far they are from the boundary, not just which side
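The perturbation idea can be sketched in Python by adding Gaussian noise to numeric feature vectors. The noise level, number of copies, and the sample data are hypothetical, and keeping each synthetic example's original label assumes the noise is small enough not to change its class.

```python
import random


def amplify(examples, copies, sigma, seed=0):
    # examples: list of (feature_vector, label) pairs
    # Each synthetic copy adds independent Gaussian noise (std dev sigma) to every feature
    # and keeps the original label; assumes noise this small does not change the class
    rng = random.Random(seed)
    synthetic = [
        ([x + rng.gauss(0.0, sigma) for x in features], label)
        for features, label in examples
        for _ in range(copies)
    ]
    return list(examples) + synthetic


real = [([1.0, 2.0], "pos"), ([3.0, 4.0], "neg")]
augmented = amplify(real, copies=3, sigma=0.1)
print(len(augmented))  # 8: 2 real + 6 synthetic
```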

Probability Similarity Measures

There are several measures of distance between probability distributions.

The KL divergence, or information gain, measures the information lost when Q is used in place of P:

$D_{KL}(P \parallel Q) = \sum_i P(i) \ln \frac{P(i)}{Q(i)}$

Entropy is a measure of the information in a distribution:

$H(P) = -\sum_i P(i) \ln P(i)$
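Both measures translate directly into Python using natural logarithms (so results are in nats). The two distributions are hypothetical; terms with P(i) = 0 are skipped following the usual 0 ln 0 = 0 convention, and the sketch assumes Q(i) > 0 wherever P(i) > 0.

```python
import math


def kl_divergence(p, q):
    # sum_i P(i) ln(P(i) / Q(i)); terms with P(i) = 0 contribute 0 by convention
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def entropy(p):
    # H(P) = -sum_i P(i) ln P(i), in nats
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


p = [0.5, 0.5]
q = [0.9, 0.1]
print(kl_divergence(p, p))            # 0.0: nothing lost replacing P with itself
print(round(kl_divergence(p, q), 4))  # 0.5108
print(round(kl_divergence(q, p), 4))  # 0.368: KL divergence is not symmetric
print(round(entropy(p), 4))           # 0.6931, i.e. ln 2
```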

Blackbox vs. Descriptive Models

Ideally models are descriptive, meaning they explain why they are making their decisions.

Linear regression models are descriptive, because one can see which variables are weighted most heavily.

Neural network models are generally opaque.

Lesson: ‘Distinguishing cars from trucks.’

Deep Learning Models are Blackbox

Deep learning models for computer vision are highly effective, but opaque as to how they make decisions.

They can be badly fooled by images which would never confuse human observers.