Cleaning Data: Garbage In, Garbage Out

Many issues arise in ensuring the sensible analysis of data from the field, including:

- Distinguishing errors from artifacts

- Data compatibility/unification

- Imputation of missing values

- Estimating unobserved (zero) counts

- Outlier detection

Data Compatibility

Data needs to be carefully massaged to make “apple to apple” comparisons:

Unit conversions

NASA’s Mars Climate Orbiter exploded in 1999 due to a metric-to-English conversion issue.

- Even sticking to the metric system has potential inconsistencies: cm, m, km?

- Bimodal distributions can indicate trouble.

- Z-scores are dimensionless quantities.

Vigilance in data integration is essential.

Number/character code representations

The Ariane 5 rocket exploded in 1996 due to a bad 64-bit float to 16-bit integer conversion.

- Measurements should be integers.

- Counts should be integers.

- Fractional quantities should be decimal, not (q, r) like (pounds, oz) or (feet, inches)

Character Representations

A particularly nasty cleaning issue in textual data is unifying character code representations:

- ISO 8859-1 is a single byte code for ASCII

- UTF-8 is a multibyte encoding for all Unicode characters

Name unification

- Use simple transformations to unify names, like lower case, removing middle names, etc.

- Consider phonetic hashing methods like Soundex and Metaphone.

Tradeoff between false positives and negatives.

Time/date unification

Aligning temporal events from different datasets/systems can be problematic.

- Use Coordinated Universal Time (UTC), a modern standard subsuming GMT.

- Financial time series are tricky because of weekends and holidays: how do you correlate stock prices and temperatures?

Financial unification

- Currency conversion uses exchange rates.

- Correct stock prices for splits and dividends.

- Use returns/percentage change instead of absolute price changes.

- The time value of money needs correction for inflation for fair long-term comparisons.

Why do stock/oil prices correlate over 30 years?

Dealing with Missing Data

An important aspect of data cleaning is properly representing missing data:

- What is the year of death of a living person?

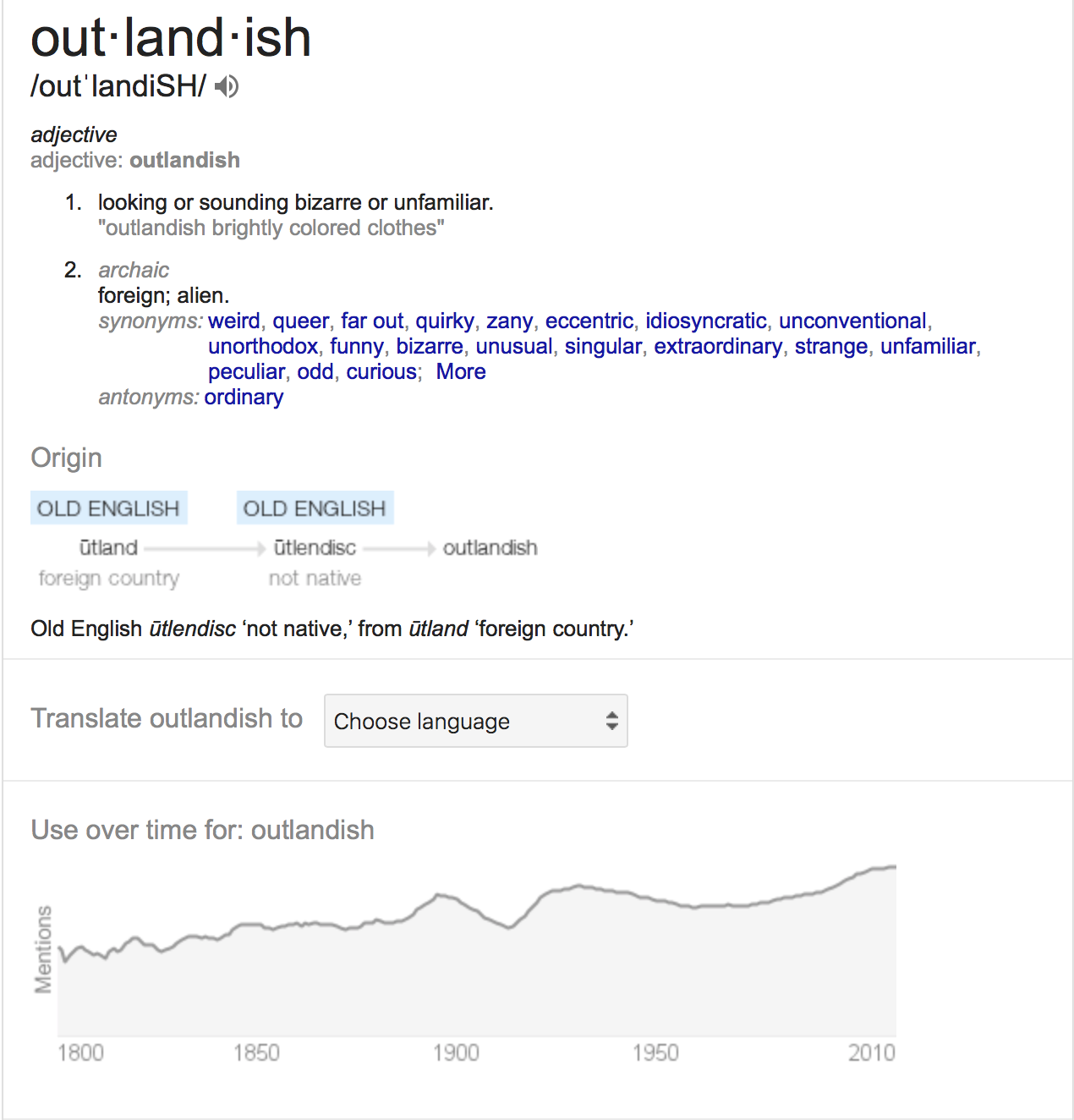

- What about a field left blank or filled with an obviously outlandish value?

- The frequency of events too rare to see?

Setting such values to zero is generally wrong

Imputing Missing Values

With enough training data, one might drop all records with missing values, but we may want to use the model on records with missing fields.

Often it is better to estimate or imput missing values instead of leaving them blank.

A good guess for your death year is birth + 80.

Imputation Methods

Mean value imputation -

leaves mean same.

Random value imputation -

repreatedly selecting random values permits statistical evaluation of the impact of imputation.

Imputation by interpolation -

using linear regression to predict missing values works well if few fields are missing per record.

Outlier Detection

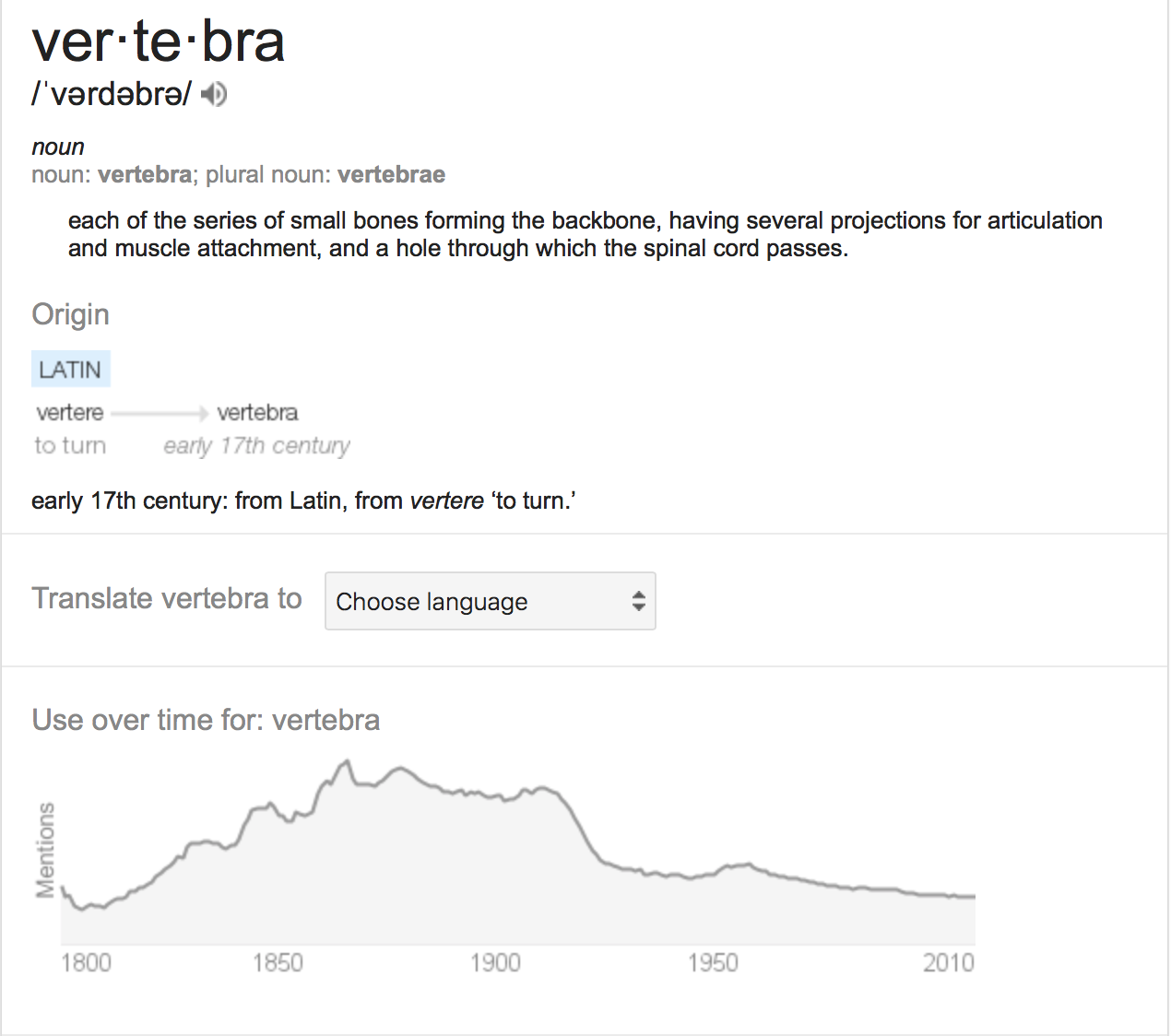

The largest reported dinosaur vertebra is 50% larger than all others: presumably a data error.

- Look critically at the maximum and minimum values for all variables.

- Normally distributed data should not have large outliers, k sigma from the mean.

Fix why you have an outlier. Don’t just delete.

Detecting Outliers

- Visually, it is easy to detect outliers, but only in low dimensional spaces.

- It can be thought of as an unsupervised learning problem, like clustering.

- Points which are far from their cluster center are good candidates for outliers.

Dekete Outliers Prior to Fitting?

- Deleting outliers prior to fitting can

yield better models, e.g. if these points correspond to measurement error. - Deleting outliers prior to fitting can

yield worse models. e.g., if you are simply deleting points which are not explained by your simple model.

Vocabularies

[1]

[2]

[3]