MapReduce Related Concepts

Summary

- Cluster Computing:

- Compute nodes are processor chip, main memory, and disk.

- Cluster computing is a common architecture that has a cluster of compute nodes.

- Compute nodes are mounted in racks.

- Racks are connected by a high-speed network or switch.

- Distributed File Systems:

- In the distributed file system, files are composed of chunks of 64 MB, and each chunk is replicated several times on different compute nodes or racks.

- MapReduce:

- MapReduce can be a programming style or a programming system.

- The Map Function:

- This function is written by the programmer(user).

- It takes a collection of input objects and turns each into zero or more key-value pairs.

- The Reduce Function:

- A MapReduce programming system sorts all the key-value pairs produced by all the Map tasks, forms all the values associated with a given key into a list and distributes key-list pairs to Reduce tasks.

Distributed File Systems

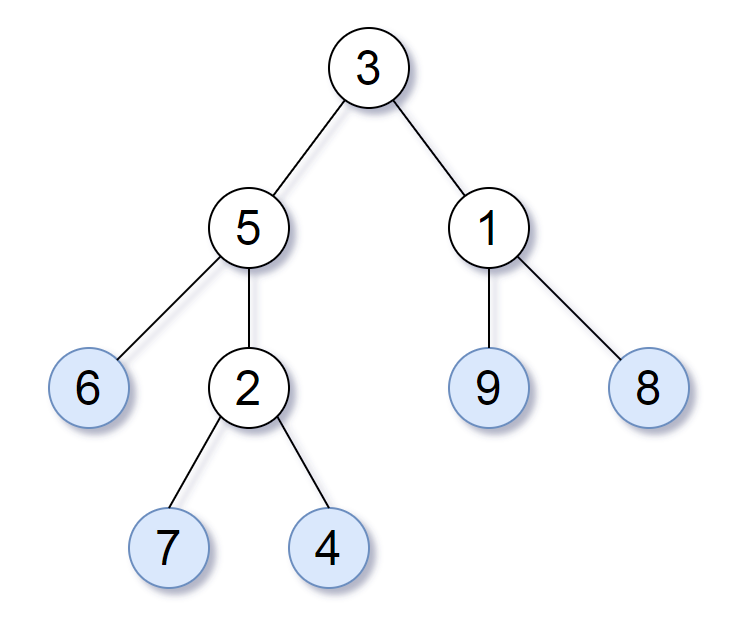

Physical Organization of Compute Nodes

Cluster computing is one type of parallel-computing architecture. Compute nodes are stored on racks, perhaps 8~64 on a rack. The nodes on a single rack are connected by a network. There can be many racks of compute nodes, and racks are connected by another level of network or a switch.

DFS Implementations

There are several DFS that are used in practice. For example, the Google File System (GFS); Hadoop Distributed File System (HDFS), an open-source DFS used with Hadoop, an implementation of MapReduce; Colossus, an improved version of GFS.

Large-Scale File-System Organization

- File should be large, i.e. with 100GB ~ 1TB size.

- Files are rarely updated.

Files are divided into chunks, which are read typically 64 MB in size. Chunks are replicated, usually three times, at three different comput nodes. Moreover, the nodes holding copies of one chunk should be located on different racks.

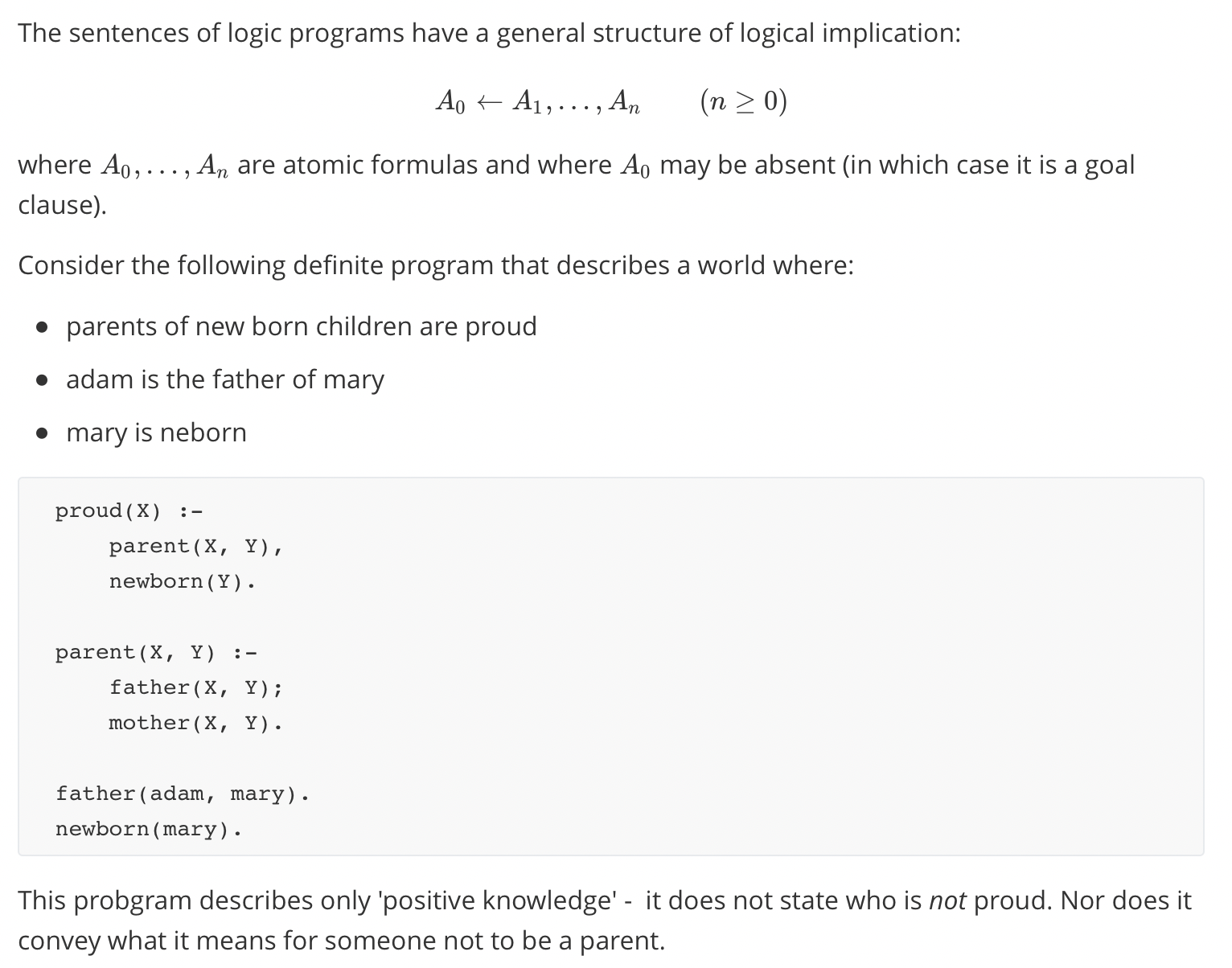

MapReduce

In brief, a MapReduce computation executes as follows:

- Some # of Map tasks each are given one or more chunks from a distributed file system. These Map tasks turn the chunk into a sequence of key-value pairs.

- The key-value pairs from each Map task are collected by a master controller and sorted by key. The keys are divided among all the Reduce tasks, so all key-value pairs with the same key wind up at the same Reduce task.

- The Reduce tasks work on one key at a time, and combine all the values associated with that key in some way.

The Map Tasks

- We view input files for a Map task as consisting of elements, which can be any type: a tuple or a document.

- Technically, all inputs to Map tasks and outputs from Reduce tasks are of the key-value-pair form, but normally the keys of input elements are not relevant and we shall tend to ignore them.

- Insisting on this form for inputs and outputs is motivated by the desire to allow composition of several MapReduce processes.

Grouping by Key

- As soon as the Map tasks have all completed successfully, the key-value pairs are grouped by key, and the values associated with each key are formed into a single list of values for that key.

- The grouping is performed by the sysem, regardless of what the Map and Reduce tasks do.

- The master controller process knows how many Reduce tasks there will be.

- Then the master controller picks a hash function that takes a key as argument and produces a bucket number from 0 to r - 1, where r is the number of Reduce tasks.

- To perform the grouping by key and distribution to the Reduce tasks, the master controller merges the files from each Map task that are destined for a particular Reduce task and feeds the merged file to that process as a sequence of key/list-of-values pairs.

The Reduce Tasks

- We shall refer to the application of the Reduce function to a single key and its associated list of values as a reducer.

- A Reduce task receives one or more keys and their assoicated value lists. That is, a Reduce task executes one or more reducers.

- The outputs from all the Reduce tasks are merged into a single file.

Combiners

- Sometimes, a Reduce function is associative and commutative.

- The values to be combined can be combined in any order with the same result.

- When the Reduce function is associative and commutative, we can push some of what the reducers do to the Map tasks.

Reducers, Reduce Tasks, Compute Nodes, and Skew

- If we want maximum parallelism, then we chould use one Reduce task to execute each reducer, i.e., a single key and its associated value list.

- We could execute each Reduce task at a different compute node, so they would all execute in parallel.

Details of MapReduce Execution

References

[1] Mining of Massive Datasets

[2] Hadoop: Distributed Architecture, HDFS, MapReduce2-11.pdf)